Policing Content in the Quasi-Public Sphere

September 2010

Authored by Jillian C. York, with contributions from Robert Faris and Ron Deibert, and editorial assistance from Rebekah Heacock


Introduction

Online conversations today exist primarily in the realm of social media and blogging platforms, most of which are owned by private companies. Such privately owned platforms now occupy a significant role in the public sphere, as places in which ideas and information are exchanged and debated by people from every corner of the world. Instead of an unregulated, decentralized Internet, we have centralized platforms serving as public spaces: a quasi-public sphere. This quasi-public sphere is subject to both public and private content controls spanning multiple jurisdictions and differing social mores.

But as private companies increasingly take on roles in the public sphere, the rules users must follow become increasingly complex. In some cases this can be positive, for example, when a user in a repressive society utilizes a platform hosted by a company abroad that is potentially bound to more liberal, Western laws than those to which he is subject in his home country. Such platforms may also allow a user to take advantage of anonymous or pseudonymous speech, offering him a place to discuss taboo topics.

At the same time, companies set their own standards, which often means navigating tricky terrain; companies want to keep users happy but must also operate within a viable business model, all the while working to keep their services available in as many countries as possible by avoiding government censorship. Online service providers have an incentive not to host content that might provoke a DDoS attack or raise costly legal issues.1 Negotiating this terrain often means compromising on one or more of these areas, sometimes at the expense of users. This paper will highlight the practices of five platforms (Facebook, YouTube, Flickr, Twitter, and Blogger) in regard to terms of service (TOS) and account deactivations. It will highlight each company’s user policies, as well as examples of each company’s procedures for policing content.

Political Activism and Social Media

The Internet can be a powerful public venue for free expression. Early idealist observers imagined the Internet as an unregulated forum without borders, able to bypass laws and virtually render the nation-state obsolete. Governments meanwhile sought to assert authority over cyberspace early on. However, the growth of private Internet platforms has spread the debate over content regulation to privately hosted Internet sites.2

Over the course of the past few years, social media in particular has emerged as a tool for digital activism. Activists from every region of the world utilize a variety of online tools, including Facebook, YouTube, Twitter, Flickr, and Blogger, in order to effect political change and exercise free speech, though they often face numerous obstacles in doing so.

Facebook has become a tremendously popular tool for activism; however, its popularity in some places has also been its downfall: the site is blocked in several countries, including Syria, where—prior to it being blocked in 2007—experts called the site a “virtual civil society.” Nevertheless, it is used globally to organize protests, create campaigns, and raise money for causes.

YouTube’s easy-to-use interface means that anyone with a video camera can become a citizen reporter. Morocco’s ‘Targuist Sniper’ is one such example; in 2007, he exposed police corruption in the northern part of the country by videotaping officers asking for bribes. The ‘Sniper,’ who chooses to remain anonymous, has inspired numerous others to follow in his footsteps, including the Moroccan authorities, who have allegedly begun using his tactics to combat corruption.3

At times, companies must adhere to the laws of other countries in which they operate, or risk their sites being blocked, as Yahoo! famously learned in 2000, when a French court ruled that the company was required to prevent residents of France from participating in auctions of Nazi memorabilia and from accessing content of that nature. Following numerous court cases and a media storm, the company eventually chose to remove all items related to Nazism globally, prohibiting altogether items that “are associated with groups deemed to promote or glorify hatred and violence.” 4

YouTube has been blocked in a number of countries, including Brazil,5 China,6 Syria,7 Thailand,8 Pakistan,9 Turkey,10 and Iran, where the video-sharing tool was blocked in the wake of violent protests following the 2009 elections, during which a citizen-made video showing the death of young passerby Neda Agha Soltan was made famous via YouTube. 11

Since the precedent-setting Yahoo! case, numerous companies have followed suit. For example, Flickr users in Singapore, Hong Kong, India, and South Korea are bound to local terms of service (TOS), which allow them to see only photos deemed ‘safe’ by Flickr staff (as opposed to the rest of the world, where only flagged content is reviewed). German users may only view ‘safe’ and ‘moderate’ photos. 12 Similarly, in order to remain unblocked in Thailand, YouTube utilizes geolocational filtering, preventing access by Thai users to videos that depict the royal family unfavorably, as such videos are considered to constitute the crime of lese majeste. 13

Governments may also request information from private companies about their users, or request that companies remove certain information from their sites. One prominent recent example involved TOM-Skype, a version of Skype available for use in China. Researchers discovered that the full text of chats between TOM-Skype users was being scanned for sensitive keywords, and if present, the keywords were blocked and the chat logs uploaded and stored on servers in China. 14

In 2008, following the arrest of Moroccan Facebook user Fouad Mourtada,15 civil liberties groups suspected Facebook of turning over Mourtada’s information, something the social networking site vehemently denied.16 In a separate case, Chinese journalist Shi Tao was convicted and sentenced to 10 years in prison for sending an e-mail to a U.S.-based pro-democracy website, after Yahoo! provided the Chinese authorities with information about the e-mail.17

On the other hand, Google, which owns both YouTube and Blogger and has come under scrutiny in the past for removing search results at the behest of governments,18 recently provided public access to information on the number of content removals requested by governments, as well as the number of requests with which the company complied, contrasted with the absolute number of requests per country. 19

On private platforms, users are constrained by each site’s TOS. Though TOS vary greatly from one company to another, the same general principles tend to apply: illegal content and anything potentially violating copyright is banned outright; ‘flaming,’ bullying, intimidation, and harassment are often banned as well. In some cases, those behaviors are defined; in other cases, they’re completely subjective, placing the onus on enforcement teams to determine the context of a post and whether or not it’s in violation of a site’s TOS.

The next section of this paper will offer case studies of five different networks’ methods of handling various types of TOS violations.

Facebook

Background

Since it was founded in 2004, Facebook has grown from a small Harvard-based network into a global one, with more than 500 million active users from nearly every country in the world.20

Although Facebook is a private company, it now occupies a significant role in the public sphere, as a platform on which ideas and information are exchanged and debated. Its policies, however, are often in stark contrast to its perceived role as a public space.

Over the past few years, Facebook has emerged as a tool for digital activists, who have used it to campaign for political and social causes both locally and globally.21 Facebook’s own “Causes” application allows users to spread awareness and ask for funding for favorite causes. The platform is free and offers a large suite of tools, making it an attractive platform for activists who may not be able to afford or who don’t have access to their own domains, as well as those who may feel safer hiding amongst the masses.

Facebook’s popularity as a platform for activism is especially apparent in certain developing countries where more traditional, offline methods for activism are sometimes risky for participants, or where online organizing makes it easier for like-minded people to connect across a greater geographical area. The latter is evidenced by the success of the Pink Chaddi campaign,22 which took place in 2009 in India. The campaign, a revolt of Indian feminists in response to notable incidents of violent conservative and right-wing activism against perceived violations of Indian culture, involved encouraging women to send pink chaddis (or undergarments) to the leader of an ultra-conservative Hindu group.23 The campaign’s success was aided by the organizers’ use of Facebook; however, after multiple hackings of the group page, the organizers decided to stop using Facebook for their campaign, stating that “the first rule of Facebook activism seems to be don’t use Facebook.” 24

Facebook’s TOS

Facebook users are subject to dense TOS, which have come under public scrutiny several times over the course of the past few years, most recently following a February 2009 change that granted Facebook a “non-exclusive, transferable, sub-licensable, royalty-free, worldwide license to use any IP content that you post on or in connection with Facebook (“IP License”).” 25, 26

Though Facebook is available in over one hundred languages, the TOS are available only in English, French, Spanish, German, and Italian. They are fairly typical of social media sites, for example banning users from “bullying, intimidating, or harassing any user” (Safety, 3.6); from posting “content that is hateful, threatening, pornographic, or that contains nudity or graphic or gratuitous violence” (Safety, 3.7), and from “using Facebook to do anything unlawful, misleading, malicious, or discriminatory” (Safety, 3.10).27

Unlike most other online services, Facebook requires that users provide their real names and information,28 a provision that can be problematic for activists, many of whom use pseudonyms to reduce the risk of retribution for their activities. And while no social media service provides a guarantee of anonymity, most allow a relative degree of privacy by permitting users to use pseudonymous screen names.

Account Deactivations

Users who violate Facebook’s TOS are subject to having their postings, profiles, groups, or public pages removed, as stated in the TOS: “We can remove any content or information you post on Facebook if we believe that it violates this Statement.” 29 For claims of copyright infringement, Facebook offers an appeals system. 30

For other possible TOS violations, the appeals system is not clearly outlined. There is guidance available in the Help section of the site, where it is stated that personal user accounts may be disabled for the following reasons: 31

• Continued prohibited behavior after receiving a warning or multiple warnings from Facebook

• Unsolicited contact with others for the purpose of harassment, advertising, promoting, dating, or other inappropriate conduct

• Use of a fake name

• Impersonation of a person or entity, or other misrepresentation of identity

• Posted content that violates Facebook’s Statement of Rights and Responsibilities (this includes any obscene, pornographic, or sexually explicit photos, as well as any photos that depict graphic violence. We also remove content, photo or written, that threatens, intimidates, harasses, or brings unwanted attention or embarrassment to an individual or group of people)

What is unstated, however, is how Facebook monitors and responds to such activity.

It would not be feasible for Facebook employees to monitor every user account for TOS violations. Facebook does, however, offer a function through which users may report one another with the simple click of a button. The company has not spoken publicly about how this process works, but one hypothesis is that when a critical mass of users reports a profile, that profile is automatically disabled, possibly for later review by a staff member.

There is some evidence to support such a hypothesis; in June 2010, a group called “Boycott BP” was deactivated from Facebook. News of the deactivation, which was reported on CNN’s citizen journalism site, iReport,32 spread quickly. On June 30, CNN reported that the group had been restored, and offered an explanation from Facebook that the group had been disabled by their “automated systems.”33 And in July 2010, following the removal of a post made by Sarah Palin, allegedly due to multiple reports made by other users,34 Facebook stated publicly that the post was “removed by an automated system.” 35

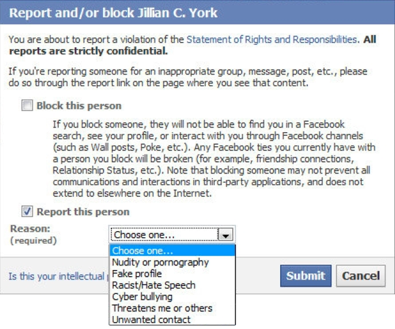

The process for reporting a user is simple; in the bottom left hand corner of each Facebook profile, there is a link which reads “Report/Block this person.” A user may choose to block another user, preventing that user from accessing his or her profile, or he may choose to report the user, as per the image below (name has been removed).

Facebook does not contact users to let them know that they have violated the TOS; rather, users learn upon attempting to access their account that they can no longer do so. A user whose account has been disabled or deactivated may appeal the decision by filling out a form available at the Facebook Help Center.36

A Pattern of Deactivations

A number of users have publicly reported the removal of their Facebook profiles or groups. The following examples are by no means exhaustive; rather, they should be considered as anecdotes indicative of a broader problem.

Najat Kessler is a U.S.-based activist whose personal Facebook profile was recently deactivated. She believes that statements she made critical of Islam were what prompted the deletion; however, when she queried Facebook about the deletion, the company responded with an e-mail that read:

At this time, we cannot verify the ownership of the account under this address. Please reply to this email with a scanned image of a government-issued photo ID (e.g., driver’s license) in order to confirm your ownership of the account. Please black out any personal information that is not needed to verify your identity (e.g., social security number). Rest assured that we will permanently delete your ID from our servers once we have used it to verify the authenticity of your account.

Please keep in mind that fake accounts are a violation of our Statement of Rights and Responsibilities. Facebook requires users to provide their real first and last names. Impersonating anyone or anything is prohibited.

In addition to your photo ID, please include all of our previous correspondence in your response so that we can refer to your original email. Once we have received this information, we will reevaluate the status of the account. Please note that we will not be able to process your request unless you send in proper identification. We apologize for any inconvenience this may cause.

Thanks,

Dominique

User Operations

Kessler responded by sending a scan of her driver’s license; one month later, she had not received a response, nor had her account been reactivated.

The platform’s ‘real name’ policy, though perhaps created with good intentions, is inconsistently enforced, often resulting in the removal of users with “unique” names, such as Najat Kessler, while users with obviously fake names (a search for “Santa Claus” returns more than 500 results) are allowed to remain.

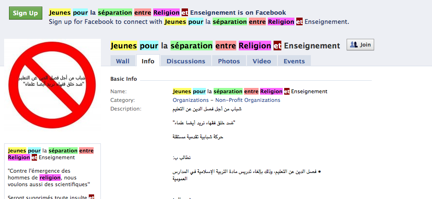

Kacem El Ghazzali, a Moroccan blogger who identifies as an atheist, also recently had his account removed, as well as a group of youth calling for the separation of religion and state in the Arab world (see cached image below); the group was reinstated within a few days, however, El Ghazzali’s profile was not.

There also exists a group seemingly created for the sole purpose of deactivating the profiles of Arab atheist users of Facebook.37 The group, whose name translates from Arabic to “Together to close all atheist pages on Facebook,” calls for its members to report profiles of atheist users.

While several users who claim to be critical of Islam have reported their accounts as disabled, so have users involved in other, unrelated causes. Yau Kwan Kiu is an activist who wrote an open letter to Facebook founder Mark Zuckerberg on Facebook,38 in which he claimed that, while his group was still permitted to exist on the site, members were not able to post to it or edit it in any way:

“We are users of Facebook with shared interests in the political development and democracy of Hong Kong and China. We have set up a political nature group page named ‘Never Forget June the Forth’ [sic]. This group was formed to engage in a free exchange of ideas and remembrances around Tiananmen Square in the spring of 1989, and there has never been an intention to be abusive, or bullying or “take any action on Facebook that infringes or violates someone else’s right or otherwise violates the law,” to quote your own official policy.

Nevertheless, the group of key administrators and creator of ‘Never Forget June the Forth’ [sic] is being unfairly harassed by Facebook, which has blocked them from posting new content and working on it at all.”

In May 2010, controversy erupted following the creation of a group on Facebook intended presumably to promote freedom of speech by means of drawing the Muslim Prophet, entitled “Everybody Draw Mohammad Day.” The group quickly made headlines as the government of Pakistan blocked Facebook in reaction,39 followed shortly by Bangladesh,40 while the UAE and Saudi Arabia reportedly banned only the group page.

The controversy apparently caused Facebook to consider geolocationally blocking the page in Pakistan,41 a strategy which has been utilized by YouTube in Thailand in the past. In the end, however, the group was removed, though whether it was removed by its administrators or by Facebook remains unclear.42

U.S.-based groups have also complained of account deactivations. In early April 2010, Wikileaks, a site intended for the publication of leaked documents, released a classified U.S. military video depicting the killing of several people, including two Reuters journalists, in Baghdad, from a helicopter gunship. The video raised awareness of and gave new credence to the project, and within a few weeks, its Facebook fan page had grown to over 30,000 members. It was then removed without warning. Facebook responded, claiming that because the page was fan-created, it violated the Statement of Rights and Responsibilities, “particularly Section 12.2, which states: ‘You may only administer a Facebook page if you are an authorized representative of the subject of the page.’”43 In the end, the page was restored.

In July 2010, Facebook user Jennifer Jajeh, a performance artist whose satirical one-woman show is entitled, “I Heart Hamas,” complained that Facebook had deactivated both her personal account and the show’s fan page. Jajeh had, months before, purchased advertisements on Facebook for the show, which she explained via e-mail to Facebook’s online sales department. An ad sales representative responded to Jajeh within 24 hours, stating “The group has been reactivated. We're sorry for any inconvenience this may have caused.” 44

Jajeh also wrote to Facebook’s disabled@facebook.com support e-mail regarding her personal account. At first, she received an e-mail from Liam, a Facebook staffer, stating:

One of Facebook's main priorities is the comfort and safety of our users. We do not allow credible threats to harm others, support for violent organizations, or exceedingly graphic content. Your violation of Facebook's standards has resulted in the permanent loss of your account. We will not be able to reactivate your account for any reason. This decision is final.

Jajeh replied to the e-mail, explaining that the title “I Heart Hamas” represented her show and not actual support for the organization Hamas; her account was reactivated shortly thereafter.45

YouTube

Background

YouTube debuted in 2005—nearly a year after Facebook—and by mid-2006 was reported to be the fastest growing site on the Web.46 In October of that same year, Google purchased the video-sharing site for $1.65 billion in stock.47

YouTube’s rise as a tool for activists has been rapid as well; in contrast to Facebook, which only officially supports activism through its fundraising application Causes, YouTube appears to have embraced its role as an activist tool. YouTube documents political use of the site on its Citizentube blog,48 and allows users to submit activist and non-profit videos to its Agent Change account for promotion on the site. 49

Over the course of the past few years, media reports of activists using YouTube have cropped up around the world. The rise of video activism is unsurprising: video images transcend languages, and as video cameras have become inexpensive and easy to operate, so have they become ubiquitous in activist communities.

YouTube’s TOS and Community Guidelines

YouTube offers a comprehensive TOS written in legal terms,50 as well as a more user-friendly set of Community Guidelines.51 The Guidelines are written clearly and comprehensively, and detail various behaviors that may result in consequences for users of the site. Among the things YouTube seeks to keep off the site are sexually explicit content, animal abuse, drug abuse, underage drinking and smoking, gratuitous violence, and hate speech. In addition, YouTube states that it reserves the right to ban members who engage in “predatory behavior, stalking, threats, harassment, intimidation, invading privacy, revealing other people’s personal information, and inciting others to commit violent acts or to violate the Terms of Use.”52 YouTube also explicitly asks users to respect copyright.

YouTube also offers tips for users tailored to each aspect of the Guidelines, in order to help users distinguish appropriateness of their content; for example, a tip regarding nudity states:

“Most nudity is not allowed, particularly if it is in a sexual context. Generally if a video is intended to be sexually provocative, it is less likely to be acceptable for YouTube. There are exceptions for some educational, documentary and scientific content, but only if that is the sole purpose of the video and it is not gratuitously graphic. For example, a documentary on breast cancer would be appropriate, but posting clips out of context from the documentary might not be.”

Community Guidelines Enforcement

YouTube is clear about the manner in which it enforces its Community Guidelines. Flagged videos are placed in a queue to be reviewed by YouTube staff. Videos that violate the Community Guidelines are removed. Videos that do not violate the Guidelines but are not appropriate for a wide audience might be age-restricted. In rare cases, such as when a video contains content that is legal in the United States but illegal in another country, YouTube will use geolocational filtering to block the video from users in the country in question.53 Repeat TOS offenders may have their accounts removed.

Research in 2008 by the OpenNet Initiative and MIT Free Culture project YouTomb documented the mechanism used by YouTube to prevent videos criticizing the Thai king from being accessed through ISPs within the country.54 This was a negotiated settlement between YouTube and the government of Thailand to replace the Thai government’s blockage of the entire site.55, 56

Through the site’s Safety Center, YouTube offers a path of recourse for users who feel that their content has been wrongly removed. The Safety Center provides a query form through which users can request more information about the removal of a video or account.57

Nevertheless, some activists have complained of having their videos wrongfully removed and their accounts wrongfully terminated. In 2007, Egyptian anti-torture activist Wael Abbas experienced account deactivation after he posted a video of two police officers sodomizing an Egyptian bus driver with a stick following a dispute.58 Although the video led to the conviction of the two officers in Egypt, YouTube initially stood by its decision; eventually, however, Abbas’s account and the videos in question were restored.59

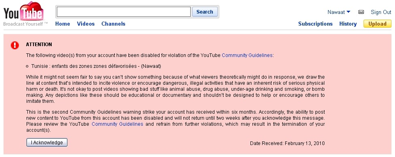

In February 2010, Tunisian dissident Sami Ben Gharbia experienced a similar incident, following his posting of a video that depicted a group of Tunisian youth sniffing glue. Ben Gharbia described the video as “a group of 6-7 year old Tunisian kids inhaling glue and talking about why and from where they’re getting the substance. Sniffing glue, which is considered gateway drug, is a very dangerous practice among Tunisian teens and kids from disadvantaged areas.”60

In response to the video, YouTube explained that any depiction of illegal activity should be educational or documentary and should not be designed to help or encourage others to imitate the activity.

Shortly after the story was made public on several blogs, YouTube restored Ben Gharbia’s account, as well as the video in question.

Ben Gharbia makes the case that another video61 hosted on YouTube of the death of Iranian Neda Agha Soltan, which news site Mashable called “too distressing to ignore,”62 was allowed to remain without question, while his video—similarly distressing—was not. The incident demonstrates the importance of considering context when dealing with activist groups.

On April 27, 2010, the BBC reported that a video of activist performer M.I.A. had been removed for its depiction of violence.63 The video, which depicts fictional violence by soldiers toward redheads, was called “a form of political protest” by journalist James Montgomery.64

Flickr

Background

Flickr was founded by Canadian company Ludicorp in 2004 and purchased by Yahoo! in early 2005.65 As the cost of digital cameras decreased throughout the decade, Flickr’s popularity continued to rise, and by 2010 the site was consistently ranked among the best photo-sharing sites on the Web.

Although still images are generally less effective than video for activism, they are often easier to capture and require less of an investment, both in terms of equipment and time, and may involve lower risk for activists. Photographs can offer a powerful complement to other campaign materials.

Photographs taken by activists and citizen journalists have been shown to be a powerful means of communication. Another way in which Flickr has been used to support such activism is in posting solidarity photo messages. In 2008, when Moroccan blogger Mohammed Erraji was arrested,66 the campaign for his release invited people from around the world to submit photographs of themselves holding signs that read “Free Mohammed Erraji” in multiple languages. Fifty-six different photos were received, and a number were used to campaign for Erraji’s release.67

TOS

Like YouTube, Flickr offers a comprehensive TOS written in legal terms,68 as well as a user-friendly set of Community Guidelines, which outline “what to do” and “what not to do” on Flickr. Among the behaviors prohibited are uploading illegal content; failing to set filters on adult material; abusing, harassing and impersonating others; hosting graphic elements such as logos for use on other sites; using Flickr for commercial purposes; and “being creepy.”69

In addition to the Community Guidelines, Flickr offers content filters, which users are expected to use on their photos. Users must choose a safety level (safe, moderate, or restricted) and a content type (photo, video, illustration, or screenshot) for their content. Users can also set a SafeSearch preference in order to determine what they see when searching for content on Flickr.

It is these same settings which are used to filter content for users in Singapore, Hong Kong, India, and South Korea, where users are only able to see photos deemed ‘safe’ by Flickr staff. German users may only view ‘safe’ and ‘moderate’ photos.70

Appeals Process

Flickr does not appear to have a clearly defined appeals process.

In the FAQ,71 under the question “Are my photos ever deleted?,” Flickr explains that photos will not be deleted, unless the user removes the photos himself, or fails to follow the Community Guidelines.72 In the Guidelines, it is stated that: “In most circumstances, we like to give second chances, so we’ll send you a warning if you step across any of the lines listed below. Subsequent violations can result in account termination without warning.”

Although there is no official appeals process for removed content, users can contact Flickr staff via the Help page.73

Photo Removal

Flickr is often used as a platform for photos documenting human rights issues. In one such instance, Dutch photographer Maarten Dors uploaded a set of photos entitled “The Romanian Way,” which documented life in an impoverished part of Romania. One photo in the set captured a young boy smoking a cigarette,74 a violation of Flickr’s TOS. Flickr deleted the photo from Dors’ account, explaining that “Images of children under the age of 18 who are smoking tobacco is prohibited across all of Yahoo's properties.”75

Dors re-posted the photo with “no censorship!” branded across the top, and contacted the company, which responded apologetically to say, “We messed up and I'm very sorry that your photo, "The Romanian Way" was removed from your photostream. It should not have been and I'm working with the team to ensure that we have a better understanding of our policies so that they are applied correctly.”76

The story was reported by a number of media outlets and has helped to inform future decisions by Flickr staff in evaluating content.77

Twitter

Background

Twitter, created in 2006, was the first service of its kind, offering users the ability to produce short (140-character) text-based posts, a practice otherwise known as “microblogging.”

In addition to the main site, Twitter.com, users can choose from a variety of third-party software, or can send updates via SMS (in some countries), making the site easy to use. Twitter is also difficult to block in its entirety, owing to its open API. Even if a government blocks Twitter.com, users can still send tweets via SMS and third-party applications.

Nevertheless, as Twitter’s popularity has grown, the site has been filtered in a number of places, most notably in China during the 20th anniversary of the Tiananmen Square massacre in June 2009,78 and in Iran during the 2009 election period.79 Individual Twitter accounts have also been blocked in Saudi Arabia,80 Tunisia, and Bahrain.81 In all cases, it was likely Twitter’s use by activists that prompted the blocking.

Twitter emerged as an activist tool in 2008 and has since been used to report from protests, campaign for politicians, campaign against corporations, and track elections, among other things. One notable early example is that of James Buck, a graduate student and journalist who was arrested while covering protests in Egypt and reported via Twitter that he had been arrested. His Twitter followers spread the word to his university and lawyers, which helped lead to his release.82

Twitter’s notability as an activist tool rose sharply during Iran’s 2009 elections, when the tool was used to disseminate information from protests in Iran. The Western media proclaimed this to be the ‘Twitter Revolution’.83 The U.S. Department of State notably intervened at the height of the protests to request that Twitter delay scheduled maintenance in order to accommodate Iranian time zones.84 The actions by the media and by the Department of State undoubtedly raised Twitter’s stature globally, despite criticism that the decision was a public relations stunt.85 Although the scale of usage and impact of Twitter in the Iran protests were exaggerated by many, the events in Iran brought wide attention to the use of Twitter as a potent tool for activism.

Twitter has also been used by governments to propagate certain views. For example, in January 2009, the Israeli Consulate of New York held a press conference on Twitter to field questions about Israel’s role in the 2008-2009 Gaza War,86 while the U.S. Department of State has used the Twitter platform to hold a contest for young people to share their views about democracy.87

Twitter Rules

Twitter offers simple, user-friendly TOS,88 as well as a set of Twitter Rules,89 which explicitly ban impersonation, trademark and copyright violations, the posting of private information, specific threats of violence, illegal activity, pornography, name squatting, abuse of the Twitter API, and various types of spam. Unlike many social media platforms, Twitter does not explicitly mention hate speech.

Notably, Twitter rarely intervenes in disputes between users, and often responds to complaints with the following message: “Twitter provides a communication service. As a policy, we do not mediate content or intervene in disputes between users. Users are allowed to post content, including potentially inflammatory content, provided that they do not violate the Twitter TOS and Rules.”

As a result, Twitter deactivations are uncommon and tend to happen only in the form of Digital Millennium Copyright Act (DMCA) takedowns, such as when several accounts representing characters from the television show “Mad Men” were removed.90 Other common causes of deactivations include mass following and the mass sending of direct messages, both considered to be spam.

To date, there have been no widely reported incidents of activist accounts being permanently deleted, although some users have had their accounts erroneously deleted and then restored after contacting the company.

Blogger

Background

Launched in 1999, Blogger was among the first blog-publishing tools. The company was purchased by Google in 2003,91 and has since grown to be one of the most popular blogging platforms. Blogs on this platform are by default hosted at the .blogspot domain, but Blogger also allows the use of custom domains.

Blogging is a natural extension of older forms of self-publishing such as zines. It appeals both to activists who have used traditional forms of self-publishing and to those newer to the scene, who are drawn by the ease of self-publishing and the ability to have their writing widely read.

Eleven years after its inception, Blogger remains one of the most popular blogging platforms globally, with an Alexa traffic ranking of 7 as of April 2010 (by comparison, the next most popular blogging site, Wordpress.com, ranks 16th).92 Although Blogger is often not the first choice of bloggers who wish to remain anonymous,93 it is nevertheless a popular tool amongst activist bloggers around the world.

One example of the use of Blogger for social activism is the “Ethiopian Suicides” blog, which tracks issues of migrant workers in Lebanon.94 In this example, the blog is used as a hub for information about crimes against migrant workers; the blog’s owners also utilize Twitter, Facebook, and offline tools to spread their message.

Along with numerous other blogging platforms, Blogger has been subject to filtering in several countries. In February 2006, following the publication of controversial cartoons of the Prophet Mohammed in Denmark (many of which were re-published on blogs), the Pakistani Supreme Court ordered the blocking of the entire .blogspot domain,95 resulting in the collateral blocking of tens of thousands of unrelated blogs; the cartoons were found on only 12 Blogger-hosted blogs.96 A year later, Syria blocked the .blogspot domain, though not Blogger.com, meaning that bloggers in the country could publish content, but not read content hosted at the .blogspot domain including, in many cases, their own blogs.97 The blocking of blogs hosted by Blogger has also occurred in Turkey, Myanmar, Iran, and China.

Terms of Use

Blogger has been supportive of activists. Blogger’s TOS, as well as their policies surrounding deletion and deactivation, are transparent and well defined. The first two paragraphs of Blogger’s Content Policy read as follows:

Blogger is a free service for communication, self-expression and freedom of speech. We believe Blogger increases the availability of information, encourages healthy debate, and makes possible new connections between people.

We respect our users' ownership of and responsibility for the content they choose to share. It is our belief that censoring this content is contrary to a service that bases itself on freedom of expression.

Blogger’s Content Policy outlines the platform’s various policies toward different types of speech, as well as the consequences for violating the TOS.98 For example, while illegal activities such as necrophilia and child pornography are banned outright, Blogger allows adult content to be hosted on their domain, with limitations. The policy is outlined as such: “We do allow adult content on Blogger, including images or videos that contain nudity or sexual activity. But, please mark your blog as 'adult' in your Blogger settings. Otherwise, we may put it behind a 'mature content' interstitial.”

Among the other content prohibited by Blogger are promotion of illegal activities, impersonating others, spam, and crude content without proper context.

Blogger’s Content Policy lists several courses of action they may take following a TOS violation: placing the blog behind a “mature content” interstitial; placing the blog behind an interstitial wherein only the blog’s owner may see the content; deleting the blog; disabling a user’s access to the blog; disabling a user’s access to his/her Google account; and reporting the user to law enforcement.99

Blogger Account Suspensions

Blogger frequently applies their TOS to eliminate blogs that they consider to be spam, occasionally resulting in erroneous removals. Users whose blogs have been removed for this reason are able to appeal the decision, and those that are determined to have had their blogs removed erroneously are able to regain access. Numerous examples are outlined in the Blogger Help Forums.100

Blogger-hosted blogs have also been removed for alleged copyright violations.101 In many cases, the notice and takedown process is warranted. However, this mechanism has also been abused by some who have used it to inappropriately request the takedown of protected speech. In one case Bill Lipold, the owner of a record company, received copyright notices for demo tracks of songs that were produced within his company and which he was thus legally entitled to post.102 Google has urged bloggers who disagree with DMCA notifications to file a counter-notification, as is their right.103

Blogger faced controversy in 2009 when model Liskula Cohen filed defamation charges against an anonymous blog hosted on the site. The blog, called Skanks in NYC, was expressly created for the purpose of posting defamatory information about Cohen. A New York state court ruled that Cohen was entitled to the blogger’s identity and ordered Google to turn it over to her so that she could prepare a defamation case.104

There are, however, no widely publicized cases of Blogger-hosted blogs being permanently removed for reasons other than TOS violations.

“Just Take Your Content Somewhere Else”

All of the aforementioned sites are private platforms and have policies for policing content as they see fit. The impact of private platform content restrictions on freedom of expression is mitigated where users have other hosting alternatives; when a user loses his YouTube privileges, he has many alternative sites to choose from: Vimeo, DailyMotion, MetaCafe, and numerous others. Users who are fed up with Twitter can move to Plurk or Identi.ca. Don’t like Flickr? There’s Photobucket, Snapfish, SmugMug, and plenty of smaller players. And bloggers are already spread across numerous platforms.

Facebook, however, functions differently from all of the above in that it is not just a content hosting platform, but also a social networking platform. Whereas a user who wants to express unpopular ideas in a blog has the option to move to an alternative platform or host his own content, a user of Facebook cannot simply take his network elsewhere.

On April 21, 2010, Facebook founder Mark Zuckerberg announced a new technology, a Web-wide “like” button that would allow for seamless integration between Facebook and the rest of the Web.105 Following the announcement, popular tech blog TechCrunch ran a headline that read, “I Think Facebook Just Seized Control of the Internet.”106 While this may be an exaggeration, after six years of operation Facebook may well have succeeded in becoming irreplaceable for many of its users.

Facebook is rapidly overtaking its competitors in many markets. Across the Asia Pacific region, for example, Facebook holds the top spot amongst social networking sites in every country except for Japan, Taiwan, and South Korea, where local competitors won out, and India, where Orkut still rules.107 In the United States, Facebook has overtaken social networking site MySpace in Web traffic, after years of coming in second. Nevertheless, the presence of other similar social networking sites does not mean that users have a viable alternative to Facebook; users follow their friends, and if their friends are moving to Facebook, it stands to reason that they will follow.108 That point is echoed by sociology professor Zeynep Tufekci, who argues that not having a Facebook profile as a college student is “tantamount to going around with a bag over your face; it can be done, but at significant social cost.”109

The success and character of Facebook effectively render it a social utility for many segments of cyberspace, and as the number of people using Facebook steadily grows, the argument that one can “just take content somewhere else” will no longer apply. Thus, how users are allowed to organize and share content on the platform becomes an even bigger question.

Social media researcher danah boyd contends that Facebook views itself as a utility and thus a natural path would be for it to be regulated as such. Comparing Facebook to the existing situation of utilities such as water, sewer, and electricity in the United States, boyd argues that the alternative to Facebook is “no Facebook,” implying no social networking at all. boyd also draws comparisons between Facebook and the ongoing debate surrounding broadband Internet, stating: “Facebook may not be at the scale of the Internet (or the Internet at the scale of electricity), but that doesn’t mean that it’s not angling to be a utility or quickly become one.”110

The argument that “no one leaves Facebook” is a common one. In May 2010, however, in response to a new opt-out feature, a number of Facebook users began publicly stating a desire to leave over what they called lax privacy controls.111 One site called for users to leave Facebook, and over 11,000 signed up to do so. It is not clear how many followed through with their promise.112 Even if they had all left, the numbers are small compared to the overall number of users on the site, which at the time of publication was over 400 million.113 Facebook responded to the backlash over privacy by changing their privacy features.114

Social Media: Private Companies, Public Responsibilities

The Universal Declaration of Human Rights posits freedom of speech as an essential and universal freedom.115 The rights to free expression and peaceable assembly are also guaranteed by the First Amendment of the United States Constitution, but do not extend to privately-owned spaces, whether online or off. Offline, this limitation has been challenged several times in U.S. courts, to varying results. Though these cases are beyond the scope of Facebook, there are similarities nonetheless.116

One early attempt to provide a constitutional basis for protection of First Amendment rights on private property was the case of Marsh v. Alabama (1946), in which the Supreme Court held that “owners and operators of a company town could not prohibit the distribution of religious literature in the town's business district because such expression was protected by the First and 14th amendments.”117

Another example can be found in a case involving a shopping mall. New Jersey Coalition Against War in the Middle East v. J.M.B. Realty Corp. (1994) established the right of individuals to hand out protest literature in one of the state’s shopping malls. The Coalition, which had been asked to leave various New Jersey malls on account of their trespassing, took their fight to court and won, based on the assertion that the mall owners “have intentionally transformed their property into a public square or market, a public gathering place, a downtown business district, a community.”118

Referencing these examples, Zeynep Tufekci argues that Facebook’s latest developments fit into the historical trend of “privatization of our publics.” She argues that Facebook’s violations extend further, however, and constitute not only privatization of public spaces, but also privatization of what should be private spaces (e.g., personal spaces existing on a platform owned by someone else), stating that the issue is essentially a lack of legal protections being carried over to a new medium.119 To illustrate her point, Tufekci writes:

The correct analogy to the current situation would be if tenants had no rights to privacy in their homes because they happen to be renting the walls and doors. This week, you are allowed to close the door but, oops, we changed the terms-of-service.

Tufekci’s argument is echoed by the various U.S. court cases involving freedom of speech within malls. In such cases, the argument is made that the increasing prevalence of shopping malls over the past century, coupled with the decline of traditional town squares, means that, in order to reach a wider audience (i.e., to pass out flyers), one must go to the mall. The debate in these cases, then, is often about whether there is an alternative venue, and whether or not utilizing such a venue is burdensome to the individual.

Though it could certainly be argued that alternative venues to Facebook are disappearing, a legal remedy to such issues seems highly unlikely. A more likely outcome is that Facebook will continue to make the rules, and users will continue to protest and—with some luck—help shape those rules.

Conclusion

Privately operated Internet platforms play a vital role in online communication around the globe. The efforts of these platforms to police content on their sites therefore have a substantial impact on free expression. As users flock toward popular social media sites such as Facebook and Twitter, they are effectively stepping away from public streets and parks and into spaces that are, in some respects, analogous to shopping malls: privately owned, often subject to stringent rules, and lacking in freedoms.

The most popular Internet platforms, including those cited in this paper as well as several others, do not define permissible speech according to the legal standards of the country in which they are based, or any other. Instead, they ban some speech that would enjoy legal protection in many countries while allowing speech not tolerated in other jurisdictions. Some are acting in the spirit of the United States’ First Amendment, fighting to allow speech that is deeply offensive.

But balancing freedom of speech against other social objectives is complex and there are no easy solutions. The five companies cited in this paper are all US companies, but are frequently under pressure from foreign governments to restrict certain areas of speech. They are struggling to balance business decisions with legal and social pressures spanning numerous jurisdictions and dramatically different conceptions of acceptable speech. There is no obvious solution to such conflicting standards, and frequently the result of such disputes is blocking by foreign governments, which in some cases means individual blogs or videos, and in other cases, entire platforms.120

In addition, companies sometimes dodge such pressures by relying on their users to police their sites. The standards that these private platforms apply are generally pragmatic, highly subjective and under continual review and refinement.

The question of who should determine what is acceptable speech on these quasi-public areas has no clear answer, nor is there an obvious way to determine the extent to which allowable content standards should be tailored to individual countries or regions. Legal solutions are unlikely, if not impossible, and misunderstanding and conflict inevitable.

Ultimately, there are no right answers as to which standards should be applied across diverse jurisdictions and social climates. Processes, however, can be improved. If companies want to gain trust amongst users, they need to be aware of the human rights implications of their policies and develop systems to resolve issues between companies and their users, activists and average users alike.

Also important is transparency: Even where users disagree with the ultimate decisions taken by private companies, an open and clear process leaves little room for complaint. Companies must address challenges openly and invest sufficient resources to deal with human rights issues.

Of course, as such spaces continue to grow, and particularly if they remain unregulated, the onus falls on users to consider their own needs when it comes to quasi-public spaces online and act accordingly, which may mean reconsidering approaches to privacy and transparency, or leaving such platforms altogether. Users can also engage more actively with company administrators to improve policies.

Notes

- 1. Ethan Zuckerman, Access Controlled: The Shaping of Power, Rights, and Rule in Cyberspace, MIT Press, 2010: 83.

- 2. Jack L. Goldsmith and Tim Wu, Who Controls the Internet?: Illusions of a Borderless World, Oxford University Press, 2006: 10.

- 3. Layal Abdo, “Morocco’s ‘video sniper’ sparks a new trend,” Menassat, November 12, 2007, http://www.menassat.com/?q=en/news-articles/2107-moroccos-video-sniper-s....

- 4. Yahoo! Media Relations, “Yahoo! Enhances Commerce Sites for Higher Quality Online Experience,” January 2, 2001, http://docs.yahoo.com/docs/pr/release675.html.

- 5. OpenNet Initiative, “YouTube Does Brazil,” January 10, 2007, http://opennet.net/blog/2007/01/youtube-does-brazil.

- 6. CNN, “YouTube Blocked in China,” March 26, 2009, http://www.cnn.com/2009/TECH/ptech/03/25/youtube.china/index.html.

- 7. Committee to Protect Bloggers, “YouTube Blocked in Syria,” August 30, 2007, http://committeetoprotectbloggers.org/2007/08/30/youtube-blocked-in-syria/.

- 8. OpenNet Initiative, “YouTube and the rise of geolocational filtering,” March 13, 2008, http://opennet.net/blog/2008/03/youtube-and-rise-geolocational-filtering.

- 9. Ethan Zuckerman, “How a Pakistani ISP Shut Down YouTube,” My Heart’s in Accra, February 25, 2008, http://www.ethanzuckerman.com/blog/2008/02/25/how-a-pakistani-isp-briefl....

- 10. OpenNet Initiative, “Turkey and YouTube: A Contentious Relationship,” August 29, 2008, http://opennet.net/blog/2008/08/turkey-and-youtube-a-contentious-relatio....

- 11. Robin Wright, “In Iran, One Woman’s Death May Have Many Consequences,” TIME, June 21, 2009, http://www.time.com/time/world/article/0,8599,1906049,00.html.

- 12. Flickr, Content Filters FAQ, http://www.flickr.com/help/filters/ [last accessed April 21, 2010].

- 13. OpenNet Initiative, “YouTube and the rise of geolocational filtering,” March 13, 2008, http://opennet.net/blog/2008/03/youtube-and-rise-geolocational-filtering.

- 14. Nart Villeneuve, “Breaching Trust: An analysis of surveillance and security practices on China’s TOM-Skype platform,” Information Warfare Monitor, October 1, 2008, http://www.nartv.org/mirror/breachingtrust.pdf.

- 15. BBC News, “Jail for Facebook spoof Moroccan,” February 23, 2008, http://news.bbc.co.uk/2/hi/africa/7258950.stm.

- 16. Vauhini Vara, “Facebook Denies Role in Morocco Arrest,” The Wall Street Journal, February 29, 2008, http://online.wsj.com/article/SB120424448908501345.html.

- 17. Sky Canaves, “Beijing Revises Law on State Secrets,” The Wall Street Journal, April 29, 2010, http://online.wsj.com/article/SB1000142405274870357250457521394409802269....

- 18. Official Google Blog, “Google in China,” January 27, 2006, http://googleblog.blogspot.com/2006/01/google-in-china.html.

- 19. Google, Government Requests FAQ, http://www.google.com/governmentrequests/faq.html.

- 20. Facebook.com, “Statistics,” http://www.facebook.com/press/info.php?statistics [accessed September 20, 2010].

- 21. Dan Schultz, A DigiActive Introduction to Facebook Activism, DigiActive, 2008, http://www.digiactive.org/wp-content/uploads/digiactive_facebook_activis....

- 22. Pink Chaddi Campaign, http://thepinkchaddicampaign.blogspot.com/.

- 23. Namita Malhotra and Jiti Nichani, “Cyber Activism, Social Networking and Censorship in India: through the lens of the Pink Chaddi Campaign,” (working paper, ONI Asia, 2010).

- 24. Gaurav Mishra, “The Perils of Facebook Activism: Nisha Susan Locked Out of Pink Chaddi Campaign's Facebook Group,” Global Voices Advocacy, April 18, 2009, http://advocacy.globalvoicesonline.org/2009/04/18/the-perils-of-facebook....

- 25. Caroline McCarthy, “Facebook: Relax, we won’t sell your photos,” CNet, February 16, 2009, http://news.cnet.com/8301-13577_3-10165190-36.html?tag=newsLeadStoriesAr....

- 26. Facebook TOS, December 21, 2009 revision, http://www.facebook.com/press/info.php?statistics#!/terms.php?ref=pf.

- 27. Facebook TOS, December 21, 2009 revision, http://www.facebook.com/press/info.php?statistics#!/terms.php?ref=pf.

- 28. Facebook TOS, December 21, 2009 revision, “Registration and Security,” http://www.facebook.com/press/info.php?statistics#!/terms.php?ref=pf.

- 29. Facebook TOS, December 21, 2009 revision, “Protecting Other People's Rights,” 5.2., http://www.facebook.com/press/info.php?statistics#!/terms.php?ref=pf; “this Statement refers to the entire stated TOS.”

- 30. Facebook.com, “How to Appeal Claims of Copyright Infringement,” http://www.facebook.com/legal/copyright.php?howto_appeal=1.

- 31. Facebook.com, Help Center, “I was blocked or disabled,” http://www.facebook.com/help/?page=1048.

- 32. CNN iReport, “Facebook has Deleted Boycott BP, Leaving Almost 800,000 Fans Hanging,” June 28, 2010, http://ireport.cnn.com/docs/DOC-466703.

- 33. CNN, “Facebook ‘Boycott BP’ Page Disappeared,” June 30, 2010, http://edition.cnn.com/video/data/2.0/video/tech/2010/06/30/levs.bp.boyc....

- 34. Nancy Scola, “Tumblr’s ‘Reblog’ Used to Game Facebook into Deleting Palin,” techPresident, July 23, 2010, http://techpresident.com/blog-entry/tumblrs-reblog-used-game-facebook-de....

- 35. Martina Stewart, “Facebook Apologizes for Deletion of Palin Post,” CNN Political Ticker, July 22, 2010, http://politicalticker.blogs.cnn.com/2010/07/22/facebook-apologizes-for-....

- 36. Facebook.com, Help Center, “My Personal Profile was Disabled,” http://www.facebook.com/help/?page=1048#!/help/contact.php?show_form=dis.... or by writing to “disabled@facebook.com.”

- 37. http://www.facebook.com/group.php?v=wall&ref=mf&gid=363734422063 [last accessed April 13, 2010]

- 38. Yau Kwan Kiu, “Political Censorship in Topics Related to Hong Kong and China,” http://www.facebook.com/topic.php?uid=68310606562&topic=13807 [last accessed April 13, 2010]

- 39. Charles Cooper, “Pakistan Bans Facebook Over Muhammad Caricature Row,” CBS News, May 19, 2010, http://www.cbsnews.com/8301-501465_162-20005388-501465.html.

- 40. Wall Street Journal, “Bangladesh Blocks Facebook Over Muhammad Cartoons,” May 30, 2010, http://online.wsj.com/article/SB1000142405274870425400457527559272683936....

- 41. John Ribeiro, “Facebook Considers Censoring Content in Pakistan,” PC World, May 20, 2010, http://www.pcworld.com/article/196783/facebook_considers_censoring_conte....

- 42. Dan Goodin, “‘Draw Mohammad Day’ Page Removed from Facebook,” The Register, May 21, 2010, http://www.theregister.co.uk/2010/05/21/facebook_pakistan/.

- 43. Adrian Chen, “Wikileaks Claims Facebook Deleted Their Fan Page Because They ‘Promote Illegal Acts,’” Gawker.com, April 20, 2010, http://gawker.com/5520933/wikileaks-claims-facebook-deleted-their-fan-pa....

- 44. Jennifer Jajeh to Facebook Online Support, July 2, 2010, e-mail.

- 45. Jennifer Jajeh to Facebook Online Support, July 2, 2010, e-mail.

- 46. Gavin O’Malley, “YouTube is the Fastest Growing Website,” AdAge, July 21, 2006, http://adage.com/digital/article?article_id=110632.

- 47. Paul R. LaMonica, “Google to buy YouTube for $1.65 billion,” CNNMoney.com, October 9, 2006, http://money.cnn.com/2006/10/09/technology/googleyoutube_deal/index.htm?....

- 48. YouTube Citizentube Blog, http://www.citizentube.com/.

- 49. YouTube, Agent Change, http://www.youtube.com/user/agentchange.

- 50. YouTube TOS, http://www.youtube.com/t/terms [accessed April 22, 2010].

- 51. YouTube Community Guidelines, http://www.youtube.com/t/community_guidelines.

- 52. Ibid.

- 53. YouTube Help Center, Account and Policies, http://www.google.com/support/youtube/bin/answer.py?hl=en&answer=92486 [last accessed April 22, 2010].

- 54. OpenNet Initiative, “YouTube and the rise of geolocational filtering,” March 13, 2008, http://opennet.net/blog/2008/03/youtube-and-rise-geolocational-filtering.

- 55. Google, Government Requests, http://www.google.com/governmentrequests/ [last accessed April 22, 2010].

- 56. Google’s recently released Government Requests page indicates that the Thai government has made fewer than 10 requests to Google or YouTube, and that Google has complied with all of them.

- 57. YouTube Safety Center, Video Removal Information, http://www.google.com/support/youtube/bin/answer.py?answer=136154 [last accessed April 22, 2010].

- 58. CNN, “YouTube shuts down Egyptian anti-torture activist’s account,” November 29, 2007, http://www.cnn.com/2007/WORLD/meast/11/29/youtube.activist/index.html.

- 59. Hubpages, “Misr Digital: Graphic Videos Restored on YouTube,” http://hubpages.com/hub/MISR-Digital-Graphic-Videos-Restored-on-You-Tube.

- 60. Sami Ben Gharbia, “Google has disabled the ability of Nawaat to upload new videos,” Nawaat, February 15, 2010, http://www.nawaat.org/portail/2010/02/15/google-has-disabled-the-ability....

- 61. Sami Ben Gharbia, “Google has disabled the ability of Nawaat to upload new videos,” Nawaat, February 15, 2010, http://www.nawaat.org/portail/2010/02/15/google-has-disabled-the-ability....

- 62. Pete Cashmore, “Neda: YouTube Video Too Distressing to Ignore,” Mashable, June 21, 2009, http://mashable.com/2009/06/21/neda/.

- 63. BBC Newsbeat, “M.I.A. video ‘removed by YouTube,’” April 27, 2010, http://news.bbc.co.uk/newsbeat/hi/music/newsid_10080000/newsid_10087800/....

- 64. James Montgomery, “M.I.A. Releases Brutally Graphic Video for ‘Born Free,’” MTV, April 26, 2010, http://www.mtv.com/news/articles/1637769/20100426/mia__4_.jhtml.

- 65. Jefferson Graham, “Flickr of idea on a gaming project led to photo website,” USA Today, February 27, 2006, http://www.usatoday.com/tech/products/2006-02-27-flickr_x.htm.

- 66. Jillian York, “Morocco: Blogger Arrested, Sentenced Immediately,” Global Voices Advocacy, September 8, 2008, http://advocacy.globalvoicesonline.org/2008/09/08/morocco-blogger-arrested/.

- 67. Free Mohammed Erraji account on Flickr, http://www.flickr.com/photos/helperraji/ [last accessed April 23, 2010].

- 68. Flickr Terms of Use, http://www.flickr.com/terms.gne [last accessed April 23, 2010].

- 69. Flickr Community Guidelines, http://www.flickr.com/guidelines.gne [last accessed April 23, 2010].

- 70. Flickr, Content Filters FAQ, http://www.flickr.com/help/filters/ [last accessed April 21, 2010].

- 71. Flickr FAQ, http://www.flickr.com/help/limits/.

- 72. Flickr Community Guidelines, http://www.flickr.com/guidelines.gne.

- 73. Flickr Help, http://www.flickr.com/help/.

- 74. Maarten Dors, The Romanian Way (13), Flickr, http://www.flickr.com/photos/maartend/1429385268/in/set-72157622703284206/ [last accessed April 23, 2010].

- 75. Maarten Dors, “This Was Today?”, Flickr, http://www.flickr.com/photos/maartend/1427946418/in/photostream/ [last accessed April 23, 2010].

- 76. Ibid.

- 77. Anick Jesdanun, “Rights like free speech don’t always extend online,” USA Today, July 7, 2008, http://www.usatoday.com/tech/products/2008-07-07-1933136783_x.htm?loc=in....

- 78. Mark MacKinnon, “TWEET! Twitter blocked in China,” The Globe and Mail, June 2, 2009, http://www.theglobeandmail.com/news/world/points-east/tweet-twitter-bloc....

- 79. Adam Ostrow, “Twitter is Blocked in Iran…and in the White House,” Mashable, July 24, 2009, http://mashable.com/2009/07/24/twitter-white-house/.

- 80. Reporters Without Borders, “Two human rights activists’ Twitter pages blocked,” August 24, 2009, http://en.rsf.org/saudi-arabia-two-human-rights-activists-twitter-24-08-....

- 81. Jillian York, “Tunisia and Bahrain Block Individual Twitter Pages,” Global Voices Advocacy, January 4, 2010, http://advocacy.globalvoicesonline.org/2010/01/04/tunisia-and-bahrain-bl....

- 82. Mallory Simon, “Student ‘Twitters’ his way out of Egyptian jail,” CNN, April 25, 2008, http://www.cnn.com/2008/TECH/04/25/twitter.buck/.

- 83. Evgeny Morozov, “Iran Elections: A Twitter Revolution?”, The Washington Post, June 17, 2009, http://www.washingtonpost.com/wp-dyn/content/discussion/2009/06/17/DI200....

- 84. Mike Musgrove, “Twitter Is a Player In Iran’s Drama,” The Washington Post, June 17, 2009, http://www.washingtonpost.com/wp-dyn/content/article/2009/06/16/AR200906....

- 85. Evgeny Morozov, “A Different Take on the State Department’s Twitter Request,” Foreign Policy Net Effect, June 19, 2009,

- 86. Noam Cohen, “The Toughest Q’s Answered in the Briefest Tweets,” The New York Times, January 3, 2009, http://www.nytimes.com/2009/01/04/weekinreview/04cohen.html.

- 87. U.S. Department of State Office of the Spokesman, “Tweet About Democracy,” January 7, 2010, http://www.state.gov/r/pa/prs/ps/2010/01/134861.htm.

- 88. Twitter TOS, http://twitter.com/tos [last accessed April 23, 2010].

- 89. Twitter Rules, http://help.twitter.com/forums/26257/entries/18311 [last accessed April 23, 2010].

- 90. MG Siegler, “DMCA takedown notice forces Twitter to blacklist Mad Men characters,” August 25, 2008, http://digital.venturebeat.com/2008/08/25/twitter-blacklists-mad-men-cha....

- 91. “Google Buys Pyra Labs and Blogger.com,” February 21, 2003, EContent, http://www.econtentmag.com/Articles/News/News-Item/Google-Buys-Pyra-Labs....

- 92. Alexa Site Info, Blogger.com, http://www.alexa.com/siteinfo/google.com [last accessed April 25, 2010].

- 93. Global Voices Advocacy recommends Wordpress, along with Tor, for bloggers who wish to retain anonymity, http://advocacy.globalvoicesonline.org/projects/guide/.

- 94. Ethiopian Suicides blog, http://ethiopiansuicides.blogspot.com/.

- 95. Sami Ben Gharbia, “Pakistan: Online freedom of speech as collateral damage?” Global Voices Advocacy, February 23, 2007, http://advocacy.globalvoicesonline.org/2007/02/23/pakistan-online-freedo....

- 96. Nart Villeneuve, “Pakistan Overblocking,” March 5, 2007, http://www.nartv.org/2007/03/05/pakistan-overblocking/.

- 97. OpenNet Initiative, “Country Profile: Syria,” August 7, 2009, http://opennet.net/research/profiles/Syria.

- 98. Blogger Content Policy, http://www.blogger.com/content.g.

- 99. Blogger’s host company, Google, is part of the Global Network Initiative, a multi-stakeholder group working to advance freedom of expression and privacy in the ICT sector, http://www.globalnetworkinitiative.org/.

- 100. Blogger Help Forums, “My blog is labeled as Spam – what do I do?”, June 10, 2009, http://www.google.com/support/forum/p/blogger/thread?tid=33fc69199fb4214... [last accessed April 25, 2010].

- 101. Chilling Effects Clearinghouse, “Blogger,” http://chillingeffects.org/search.cgi?search=blogger.

- 102. Sean Michaels, “Google shuts down music blogs without warning,” The Guardian, http://www.guardian.co.uk/music/2010/feb/11/google-deletes-music-blogs.

- 103. Blogger Buzz (Official Blogger Blog), “A quick note about music blog removals,” February 10, 2010, http://buzz.blogger.com/2010/02/quick-note-about-music-blog-removals.html.

- 104. Citizen Media Law Project, “Cohen vs. Google,” January 6, 2009, http://www.citmedialaw.org/threats/cohen-v-google-blogger.

- 105. Barrett Sheridan, “Facebook’s Play to Take Over the Entire Internet,” Newsweek Techtonic Shifts Blog, April 21, 2010, http://blog.newsweek.com/blogs/techtonicshifts/archive/2010/04/22/facebo....

- 106. MG Siegler, “I Think Facebook Just Seized Control of the Internet,” TechCrunch, April 21, 2010, http://techcrunch.com/2010/04/21/facebook/.

- 107. ComScore Press Release, “Social Networking Habits Vary Considerably Across Asia-Pacific Markets,” April 7, 2010, http://www.comscore.com/Press_Events/Press_Releases/2010/4/Social_Networ....

- 108. JR Raphael, “Facebook Overtakes MySpace in US,” June 16, 2009, PC World, http://www.pcworld.com/article/166794/facebook_overtakes_myspace_in_us.html.

- 109. Zeynep Tufekci, “Facebook, Network Externalities, Regulation,” technosociology blog, May 26, 2010, http://technosociology.org/?p=137.

- 110. danah boyd, “Facebook is a utility; utilities get regulated,” apophenia, May 15, 2010, http://www.zephoria.org/thoughts/archives/2010/05/15/facebook-is-a-utili....

- 111. Koh Hui Theng, “FB Users Quit Over Privacy,” Straits Times, May 20, 2010, http://www.straitstimes.com/BreakingNews/TechandScience/Story/STIStory_5....

- 112. Quit Facebook Day, http://quitfacebookday.com.

- 113. Facebook Statistics, http://www.facebook.com/press/info.php?factsheet#!/press/info.php?statis... [last accessed May 20, 2010].

- 114. Adam Ostrow, “Facebook Announces New Privacy Features,” Mashable, May 26, 2010, http://mashable.com/2010/05/26/facebook-new-privacy-features/.

- 115. The Universal Declaration of Human Rights, http://www.un.org/Overview/rights.html.

- 116. Each of the companies cited in this paper was founded in the United States; though some have offices or servers in other countries, they are primarily concerned with U.S. law.

- 117. Heather Stricker and Bill Kenworthy, “Assembly on Private Property: Overview,” First Amendment Center, http://www.firstamendmentcenter.org/assembly/topic.aspx?topic=private_pr....

- 118. Ibid.

- 119. Zeynep Tufekci, “Facebook: The Privatization of our Privates and Life in the Company Town,” technosociology blog, May 14, 2010, http://technosociology.org/?p=131.

- 120. OpenNet Initiative, “Social Media Filtering Map,” http://opennet.net/research/map/socialmedia.